Yesterday I had the opportunity to address the Senate Standing Committee on Legal and Constitutional Affairs, which is holding an inquiry into the Criminal Code Amendment (Deepfake Sexual Material) Bill 2024.

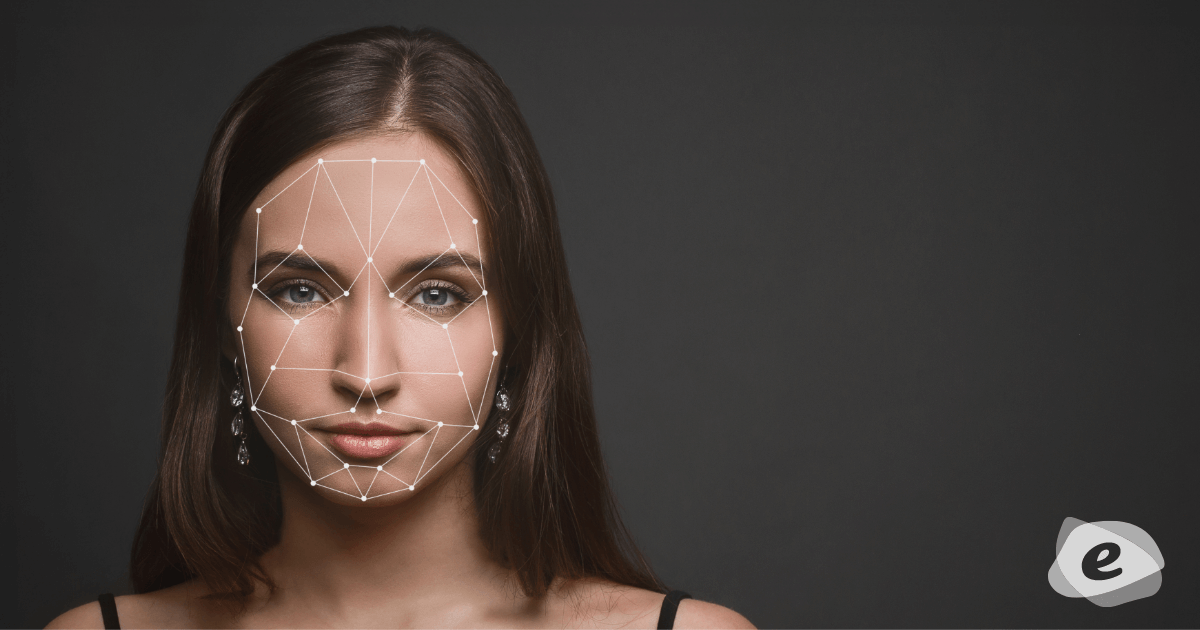

The Bill would strengthen offences targeting the creation and non-consensual sharing of sexually explicit material online, including material that has been created or altered using AI technology, commonly referred to as ‘deepfakes’.

A growing problem

There is compelling and concerning data showing that explicit deepfakes on the internet have increased by as much as 550% year on year since 2019. It's shocking to note that pornographic videos make up 98% of the deepfake material currently online, and that 99% of that imagery depicts women and girls. So let me start by saying that deepfake image-based abuse is not only becoming more prevalent; it is also highly gendered and incredibly distressing for victim-survivors.

There are two areas of deepfake abuse I am particularly concerned about: the perpetration of deepfake image-based abuse, and the creation of synthetic child sexual abuse material. While I'm aware that child sexual abuse material is covered elsewhere in the Criminal Code, the two issues are inextricably linked. They are germane both to this broader conversation and to the blending of harm types we're seeing come into our investigative division.

I'd also like to state upfront that we support the deepfake legislation at the heart of today's hearing. Criminalising this conduct is entirely appropriate: it serves an important deterrent function while expressing our collective moral repugnance at this kind of behaviour.

I believe the Bill adds powerfully to the existing, interlocking civil powers and proactive safety by design interventions championed by eSafety. Through these, we should feel justified in putting the burden on AI companies themselves to engineer out potential misuse.

Apps that ‘nudify’

Here is the description of one popular open-source AI "nudifying" app:

“Nudify any girl with the power of AI. Just choose a body type and get a result in a few seconds.”

And another:

“Undress anyone instantly. Just upload a photo and the undress AI will remove the clothes within seconds. We are the best deepnude service.”

It is difficult to conceive of a purpose for these apps that is not nefarious. Some might wonder why apps like this are allowed to exist at all, given that their primary purpose is to sexualise, humiliate, demoralise and denigrate girls, or to create child sexual abuse material of them, according to the predator's personal predilection. A Bellingcat investigation found that many such apps belong to a complex network of nudifying apps, owned by the same holding company, that effectively disguises their primary purpose in order to evade enforcement action.

Shockingly, thousands of open-source AI apps like these have proliferated online, and they are often free and easy for anyone with a smartphone to use. These apps make abuse simple and cost-free for the perpetrator, while the cost to the target is lingering and incalculable devastation.

Countering deepfakes

I'm pleased to say that the mandatory standards we've tabled in Parliament are an important regulatory step towards ensuring that proper safeguards against child exploitation are embedded in high-risk, consumer-facing open-source AI apps like these. The onus will fall on AI companies to do more to reduce the risk that their platforms can be used to generate highly damaging content, such as synthetic child sexual exploitation material and deepfaked image-based abuse involving under-18s.

These robust safety standards will also apply to the platform libraries that host and distribute these apps.

Companies must have robustly enforced terms of service and clear reporting mechanisms to ensure that the apps they host are not being weaponised to abuse, humiliate and denigrate children.

We have already begun to see deepfake child sexual abuse material, non-consensual deepfake pornography and cyberbullying content reported through our complaints schemes. Thanks to the early technology horizon-scanning work on deepfakes that eSafety conducted in 2020, each of our complaints schemes covers synthetically generated material.

Our concerns about these technologies have led to strong actions. These include mandatory industry codes tackling child sexual abuse and pro-terror material, which apply to search engines and social media sites.

In the case of deepfake pornography against adults – which we treat as image-based abuse – we will continue to provide a safety net for Australians whose digitally created intimate images proliferate online.

We have a high success rate in this area. Using our remedial powers under the image-based abuse scheme, we have also recently pursued ground-breaking civil action in the Federal Court against an individual for breaching the Online Safety Act through his creation of deepfake image-based abuse material.

A virtual problem that carries real-world dangers

As we outline in our submission to this Committee, the harms caused by image-based abuse have been consistently reported.

They include negative impacts on mental health and career prospects, as well as social withdrawal and interpersonal difficulties.

Victim-survivors have also described how their experiences of image-based abuse radically disrupted their lives, altering their sense of self and identity, and their relationships with their bodies and with others.

It's also worth noting another concern we share with the hotline and law enforcement community: the impact that the continued proliferation of synthetic child sexual abuse material will have on the volume of content our investigators are managing. We want our efforts to go towards classifying material and towards identifying and finding real children who are being abused. Beyond volume, there are also two major technical concerns.

The first is that deepfake detection tools lag significantly behind the freely available tools used to create deepfakes. Deepfakes are becoming so realistic that they are difficult to identify with the naked eye, and these images are rarely accompanied by content-identification or provenance watermarks that would help detect when material is AI-generated.

The second is that synthetic child sexual abuse material will seldom be recognised as "known child sexual abuse material". Before material can be 'hashed' (or fingerprinted) by an organisation such as the US-based National Center for Missing and Exploited Children, or by us, it must first be reported by law enforcement or the public. This material can be produced and shared faster than it can be reported, triaged and analysed.

This fact is already challenging the global system of digital hash matching we’ve worked so hard to build over the past two decades, as AI-generated material overwhelms investigators and hotlines.

A holistic approach is needed

As I have said many times, we will not arrest our way out of these challenges. Indeed, many victim-survivors who come to us through our complaints schemes just want the imagery taken down, rather than to pursue a criminal justice pathway. Likewise, we will not be able to simply regulate our way out of these harms. Instead, we will need to ensure that safety by design is considered at every stage of the design, development and deployment of both proprietary and open-source AI apps.

The risks are simply too high if we let these powerful apps proliferate into the wild without adequate safeguards from the get-go.

And if an app is created primarily to inflict harm and perpetuate abuse, then we should take a closer look at why such apps are allowed to be created in the first place. I believe it is only through this multi-faceted approach that we can tackle these rapidly proliferating AI-generated harms. We support this important Bill, and we will also pursue effective prevention, protection and proactive efforts to tackle these challenges from multiple vantage points.

This is an edited version of eSafety Commissioner Julie Inman Grant’s opening statement to the Senate Standing Committee on Legal and Constitutional Affairs’ inquiry on the Criminal Code Amendment (Deepfake Sexual Material) Bill 2024.